TL;DR

Affinity and Diversity are two metrics for measuring how well a data augmentation works.

You can refer to the original paper here.

What are Affinity and Diversity in data augmentation, and why should you use them?

Augmentations in deep learning have proven to work, but it is hard to explain why. A common assumption is that they work because they simulate realistic samples from the true data distribution:

“[augmentation strategies are] reasonable since the transformed reference data is now extremely close to the original data. In this way, the amount of training data is effectively increased” (Bellegarda et al., 1992).

“overlapping but different” (Bengio et al., 2011).

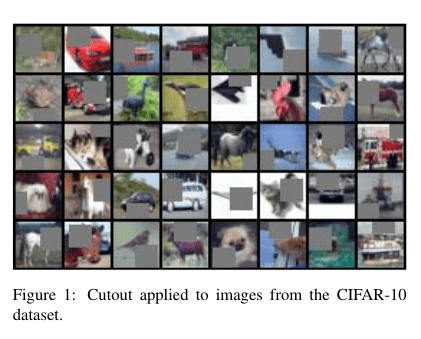

But this does not explain why Cutout or Mixup work. Cutout removes information from the image, and Mixup combines information from two different images, so neither produces realistic samples. And for some reason Cutout is useful on CIFAR-10 but not on ImageNet.
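To make the two operations concrete, here is a minimal sketch on a toy grayscale image stored as nested lists. It is a simplification, not the reference implementation of either method: the function names, the fixed patch size, and the fixed blending weight `lam` are assumptions made for illustration. Mixup as usually described also blends the labels and samples the weight randomly, which is left out here.

```python
import random

def cutout(image, size, rng):
    """Zero out a square patch of side `size` at a random location."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - size + 1)
    left = rng.randrange(width - size + 1)
    out = [row[:] for row in image]  # copy so the input stays untouched
    for r in range(top, top + size):
        for c in range(left, left + size):
            out[r][c] = 0.0
    return out

def mixup(image_a, image_b, lam):
    """Blend two same-sized images pixel by pixel with weight `lam`."""
    return [
        [lam * a + (1.0 - lam) * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]

rng = random.Random(0)
ones = [[1.0] * 4 for _ in range(4)]   # toy "white" image
zeros = [[0.0] * 4 for _ in range(4)]  # toy "black" image

masked = cutout(ones, size=2, rng=rng)   # a 2x2 patch is erased
blended = mixup(ones, zeros, lam=0.7)    # 70% of one image, 30% of the other
```

Neither output looks like a natural image, which is why the "realistic samples" explanation falls short for these augmentations.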

There is also no simple way to say what will work in a given case. Simple elastic distortions were used successfully on MNIST, but on ImageNet random crops or horizontal flips work better. In object detection, it makes more sense to use cut-and-paste or center crop.

To find out what works and what does not, people have burned thousands of GPU-hours on brute-force searches for the best augmentation policy.

But few studies focus on understanding which augmentations should be picked, or on making that choice easy.

Affinity and Diversity

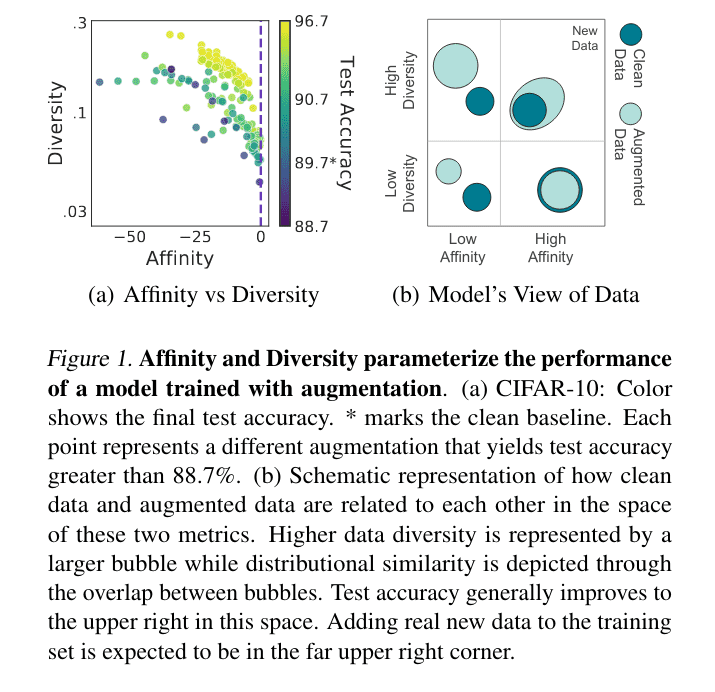

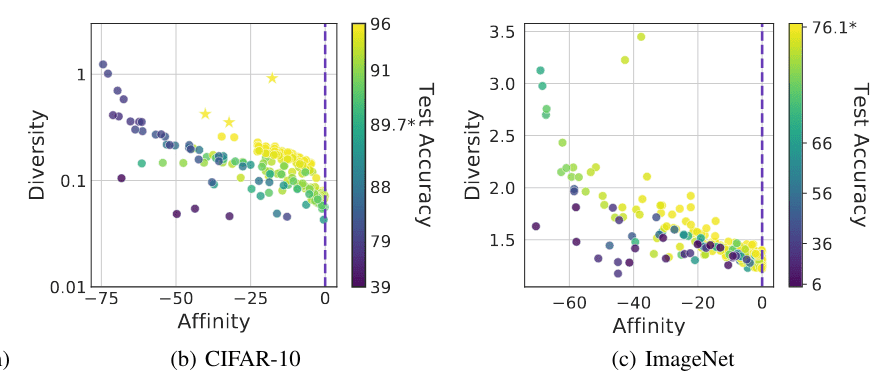

The authors identify two key, independent metrics:

Affinity - how much an augmentation shifts the training data distribution.

Diversity - how complex the augmented data is for the model and the training procedure.

Augmentation performance depends on both of them.

For a fixed level of Diversity, augmentations with higher Affinity are consistently better. Similarly, for a fixed Affinity, it is generally better to have higher Diversity.

And how does it help me?

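You can evaluate a new augmentation on affinity and diversity jointly:

Affinity - take a model trained without augmentations, evaluate it on augmented validation data, and compare its accuracy with its accuracy on the clean validation data.

Diversity - train a model with the augmentation and compare its final training loss with that of a model trained without the augmentation.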

This helps you check which augmentations work better in your case. Taken together, affinity and diversity indicate how useful the additional training examples are.
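The two measurements above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are hypothetical, the "comparison" is written as a ratio (a difference would be another reasonable choice), and the predictions and loss values are made-up numbers, not results from the paper.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def affinity(preds_on_augmented, preds_on_clean, labels):
    """Accuracy of a clean-trained model on augmented validation data,
    relative to its accuracy on clean validation data (ratio form, assumed)."""
    return accuracy(preds_on_augmented, labels) / accuracy(preds_on_clean, labels)

def diversity(train_loss_augmented, train_loss_clean):
    """Final training loss with the augmentation relative to the final
    training loss without it (ratio form, assumed)."""
    return train_loss_augmented / train_loss_clean

# Hypothetical outputs of a model trained WITHOUT augmentation.
labels = [0, 1, 1, 0, 1]
preds_on_clean = [0, 1, 1, 0, 0]      # 4 of 5 correct on clean validation data
preds_on_augmented = [0, 1, 0, 0, 0]  # 3 of 5 correct on augmented validation data

aff = affinity(preds_on_augmented, preds_on_clean, labels)
# Hypothetical final training losses from two separate training runs.
div = diversity(train_loss_augmented=0.45, train_loss_clean=0.30)
print(f"affinity={aff:.2f}, diversity={div:.2f}")
```

With this ratio form, an affinity close to 1 means the clean-trained model handles the augmented data almost as well as the clean data, and a diversity above 1 means the augmented data is harder to fit.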

Other insights from the paper: how can I use augmentations better?

- Apply augmentations dynamically (re-sampled every time a sample is drawn), or not on every sample. According to the paper, static augmentation, where the dataset is augmented once up front, never improves performance.

- If you are choosing which augmentation to use, use the affinity and diversity metrics to estimate how beneficial it is.

- Turn off augmentations that do not help; doing so is beneficial.

- Use existing packages such as albumentations or volumentations. Everything is ready to use, and they let you apply augmentations dynamically.
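The difference between static and dynamic augmentation from the first point can be sketched as follows. This is a toy illustration using only the standard library: the class names and the `random_flip` augmentation are hypothetical and do not come from albumentations, volumentations, or the paper.

```python
import random

def random_flip(sample, rng):
    """Toy augmentation: reverse the sequence with probability 0.5."""
    return sample[::-1] if rng.random() < 0.5 else sample

class StaticDataset:
    """Augment once up front; every epoch sees the same fixed samples."""
    def __init__(self, samples, augment, rng):
        self.samples = [augment(s, rng) for s in samples]

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        return self.samples[index]

class DynamicDataset:
    """Augment on every access; each epoch can see a different variant."""
    def __init__(self, samples, augment, rng):
        self.samples = samples
        self.augment = augment
        self.rng = rng

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        return self.augment(self.samples[index], self.rng)

samples = [[1, 2, 3], [4, 5, 6]]
static = StaticDataset(samples, random_flip, random.Random(0))
dynamic = DynamicDataset(samples, random_flip, random.Random(1))

# Reading the same index repeatedly stands in for repeated epochs.
static_views = [static[0] for _ in range(20)]
dynamic_views = [dynamic[0] for _ in range(50)]
```

In the static version the randomness is spent once when the dataset is built, so the model keeps seeing the same variant. In the dynamic version the augmentation is re-sampled on each access, which is the behaviour you get when a transform is applied inside the data-loading step.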